When the Ray-Ban Meta Smart Glasses launched last fall, they were a pretty neat content capture tool and a surprisingly solid pair of headphones. But they were missing a key feature: multimodal AI, which is basically the ability for an AI assistant to process multiple types of information like photos, audio, and text. A few weeks after launch, Meta rolled out an early access program, but now the wait is over for everyone else. Multimodal AI is coming to all users.

The timing is uncanny. The Humane AI Pin just launched and bellyflopped with reviewers after a universally poor user experience. It’s been somewhat of a bad omen hanging over AI gadgets. But having futzed around a bit with the early access AI beta on the Ray-Ban Meta Smart Glasses for the past few months, I think it’s a bit premature to completely write this class of gadget off.

First off, there are some expectations that need managing here. The Meta glasses don’t promise everything under the sun. The primary command is to say “Hey Meta, look and…” You can fill out the rest with phrases like “Tell me what this plant is.” Or read a sign in a different language. Write Instagram captions. Identify and learn more about a monument or landmark. The glasses take a picture, the AI communes with the cloud, and an answer arrives in your ears. The possibilities are not limitless, and half the fun is figuring out where its limits are.

For example, my spouse is a car nerd with their own pair of these things. They also have early access to the AI. My life has become a never-ending game of “Can Meta’s AI correctly identify this random car on the street?” Like most AI, Meta’s is sometimes spot-on and often confidently wrong. One fine spring day, my spouse was taking glamour shots of our cars: an Alfa Romeo Giulia Quadrifoglio and an Alfa Romeo Tonale. (Don’t ask me why they love Italian cars so much. I’m a Camry gal.) The AI correctly identified the Giulia. It also said the Tonale was a Giulia, which is funny because, visually, these look nothing alike. The Giulia is a sedan, and the Tonale is a crossover SUV. It’s really good at identifying Lexus models and Corvettes, though.

I tried having the AI identify my plants, all of which are various forms of succulents: Haworthia, snake plants, jade plants, etc. Since some were gifts, I don’t exactly know what they are. At first, the AI asked me to describe my plants because I got the command wrong. D’oh. Speaking to AI in a way that you’ll be understood can feel like learning a new language. Then it told me I had various succulents of the Echeveria, aloe vera, and Crassula varieties. I cross-checked that with my Planta app — which can also identify plants from photos using AI. I do have some Crassula succulents. As far as I understand, there is not a single Echeveria.

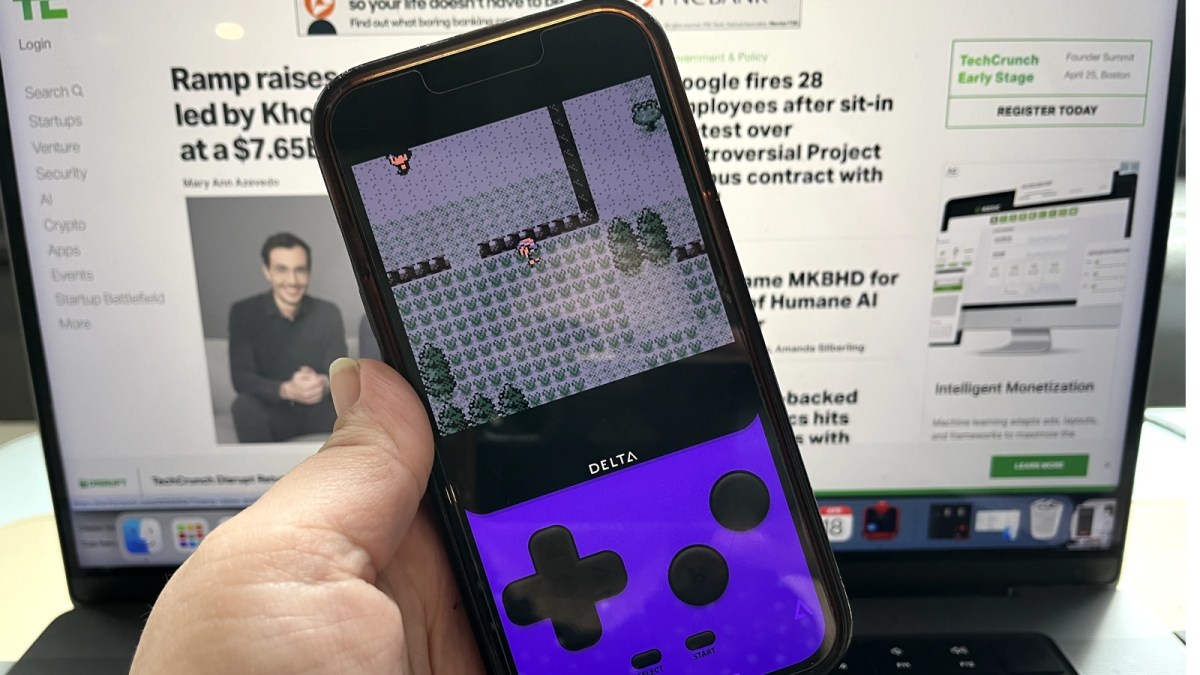

Photo by Victoria Song / The Verge

The peak experience was when, one day, my spouse came thundering into my office. “Babe!!! Is there a giant fat squirrel in the neighbor’s backyard?!” We looked out my office window and lo and behold there was, in fact, a large rodent ambling about. An unspoken contest began. My spouse, who wears a pair of Ray-Ban Meta Smart Glasses as their daily glasses, tried every which way from Sunday to get the AI to identify the critter. I pulled out my phone, snapped a photo, and went to my computer.

I won. It was a groundhog.

In this instance, the lack of zoom is what did the glasses in. The AI was able to identify the groundhog once my spouse took a picture of the picture on my phone. Sometimes it’s not whether the AI will work. It’s how you’ll adjust your behavior to help it along.

To me, it’s the mix of a familiar form factor and decent execution that makes the AI workable on these glasses. Because they’re paired to your phone, there’s very little wait time for answers. They’re headphones, so you feel less silly talking to them because you’re already used to talking through earbuds. In general, I’ve found the AI to be the most helpful at identifying things when we’re out and about. It’s a natural extension of what I’d do anyway with my phone. I find something I’m curious about, snap a pic, and then look it up. Provided you don’t need to zoom really far in, this is a case where it’s nice to not pull out your phone.

It’s more awkward when trying to do tasks that don’t necessarily fit into how I would already use these glasses. For example, mine are sunglasses. I’d use the AI more if I could wear these indoors, but as it is, I’m not that kind of jabroni. My spouse uses the AI much more because they have theirs with transition lenses. (And they’re just really into prompting AI for shits and giggles.) Plus, for more generative or creative tasks, I get better results doing it myself. When I asked Meta’s AI to write a funny Instagram caption for a picture of my cat on a desk, it came up with, “Proof that I’m alive and not a pizza delivery guy.” Humor is subjective.

But AI is a feature of the Meta glasses. It’s not the only feature. They’re a workable pair of livestreaming glasses and a good POV camera. They’re an excellent pair of open-ear headphones. I love wearing mine on outdoor runs and walks. I could never use the AI and still have a product that works well. The fact that it’s here, generally works, and is an alright voice assistant — well, it just gets you more used to the idea of a face computer, which is the whole point anyway.


Michael Johnson is a tech enthusiast with a passion for all things digital. His articles cover the latest technological innovations, from artificial intelligence to consumer gadgets, providing readers with a glimpse into the future of technology.
